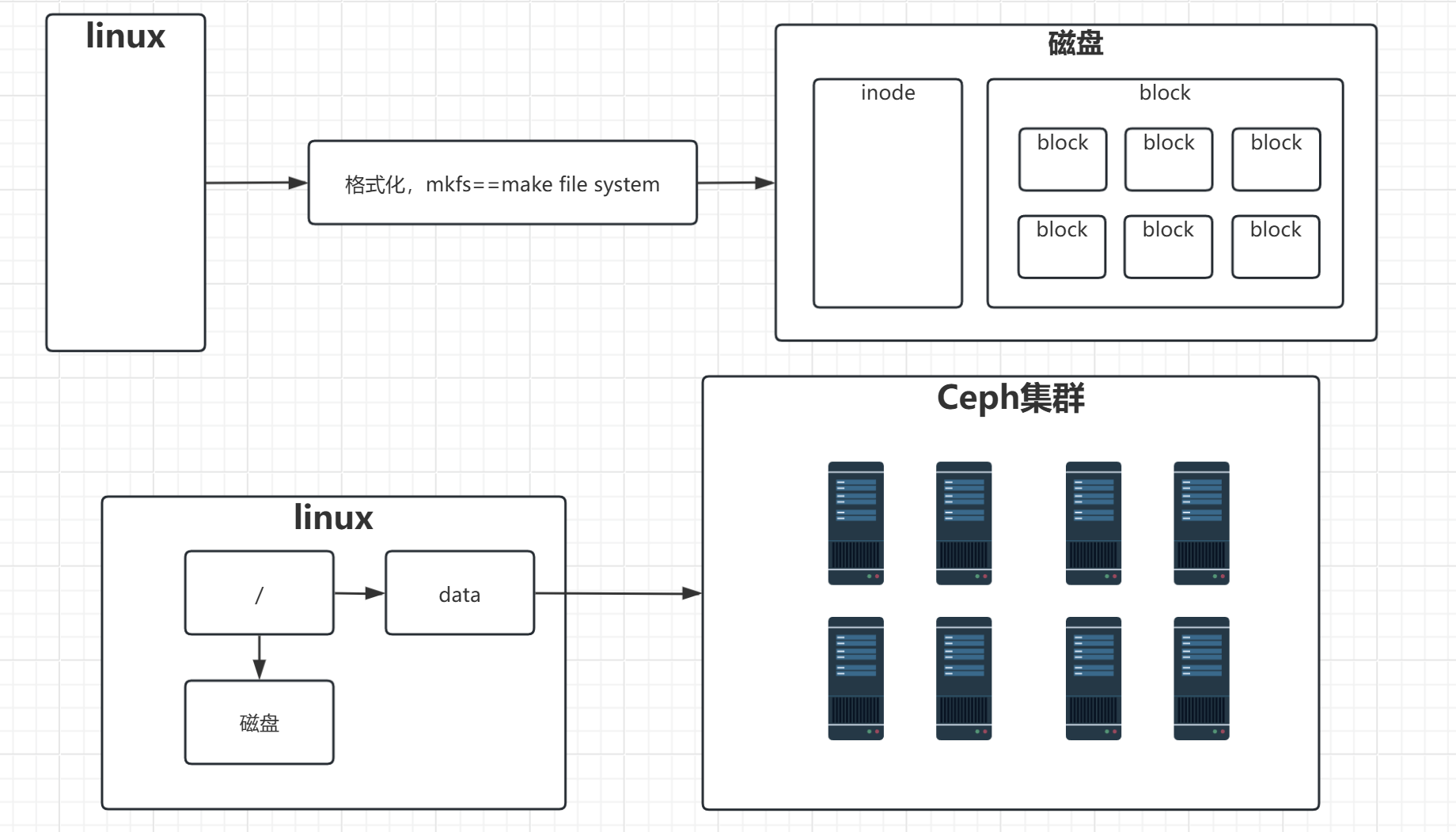

I. Why use Ceph distributed storage


II. Ceph components ⭐️

III. Types of Ceph storage ⭐️⭐️

IV. How Ceph storage works (for reference)


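V. Cluster size

1. Production environment

2. Enterprise-level performance requirements

3. Learning environment

VI. Deploying the Ceph cluster

0. Attach disks to the three nodes

300 GB

500 GB

1 TB

# Verify that the disks were attached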

[root@ceph01 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 11 18:09 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 11 18:09 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 11 18:09 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 11 18:09 /dev/sda3

brw-rw---- 1 root disk 8, 16 Aug 11 18:09 /dev/sdb

brw-rw---- 1 root disk 8, 32 Aug 11 18:09 /dev/sdc

brw-rw---- 1 root disk 8, 48 Aug 11 18:09 /dev/sdd

[root@ceph01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

loop0 7:0 0 40.4M 1 loop /snap/snapd/20671

loop1 7:1 0 63.9M 1 loop /snap/core20/2105

loop2 7:2 0 87M 1 loop /snap/lxd/27037

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 2G 0 part /boot

└─sda3 8:3 0 98G 0 part

├─ubuntu--vg-lv--0 253:0 0 8G 0 lvm [SWAP]

└─ubuntu--vg-lv--1 253:1 0 90G 0 lvm /

sdb 8:16 0 300G 0 disk

sdc 8:32 0 500G 0 disk

sdd 8:48 0 1T 0 disk

sr0 11:0 1 2G 0 rom

1. Deploy the Docker environment

· Download, upload, and extract the binary package

[root@ceph01 ~]# rz -E

[root@ceph01 ~]# tar xf docker-20.10.24.tgz

[root@ceph01 ~]# chown root:root docker/*

[root@ceph01 ~]# cp docker/* /usr/bin/

· Configure the systemd unit

[root@ceph01:~]# vim /lib/systemd/system/docker.service

[Unit]

Description=xinjizhiwa docker

Documentation=https://www.xinjizhiwa.com

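# Units that should be started before Docker (ordering only; Docker still starts if they failed to start)

After=network-online.target containerd.service

# Weak dependency: Docker starts even if this unit is not ready

Wants=network-online.target

[Service]

# systemd waits until dockerd reports that it is ready before marking the service active

Type=notify

# Command executed when the service starts

ExecStart=/usr/bin/dockerd

# Reload: send SIGHUP to the main dockerd process; $MAINPID is set by systemd

ExecReload=/bin/kill -s HUP $MAINPID

# Start/stop timeout; 0 disables it, so systemd waits indefinitely for a graceful stop instead of force-killing dockerd

TimeoutSec=0

# No limit on the number of open files

LimitNOFILE=infinity

# No limit on the number of processes

LimitNPROC=infinity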

[Install]

WantedBy=multi-user.target
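systemd treats everything after `=` as the value, so a `#` comment on the same line as a setting is not read as a comment. The following is a minimal sketch of a check for such lines before the unit is installed. The function name `find_inline_comments` is hypothetical, and the pattern is only a heuristic (it would also flag a legitimate ` #` inside a command line).

```shell
#!/bin/sh
# Heuristic sketch: list settings that carry a '#' after whitespace on the same line.
# find_inline_comments is a hypothetical helper, not part of systemd.

find_inline_comments() {
    # Reads unit-file text on stdin and prints "line-number:line" for each hit.
    # Comment lines (# or ;) and section headers ([...]) are skipped.
    grep -nE '^[^#;[][^=]*=.*[[:space:]]#' || true
}

# Sample input; on a real host, feed it /lib/systemd/system/docker.service instead.
find_inline_comments <<'EOF'
[Service]
Type=notify # wait for readiness
ExecStart=/usr/bin/dockerd
EOF
# prints: 2:Type=notify # wait for readiness
```

If the check prints anything, move that comment onto its own line above the setting.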

[root@ceph01 ~]# systemctl enable --now docker.service

· Enable kernel IP forwarding for Docker

[root@ceph01 ~]# echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

[root@ceph01 ~]# sysctl -p

· Sync to the other nodes

[root@ceph01 ~]# scp docker/* 10.0.0.102:/usr/bin/

[root@ceph01 ~]# scp docker/* 10.0.0.103:/usr/bin/

[root@ceph01 ~]# scp /lib/systemd/system/docker.service 10.0.0.102:/lib/systemd/system/

[root@ceph01 ~]# scp /lib/systemd/system/docker.service 10.0.0.103:/lib/systemd/system/

[root@ceph02 ~]# systemctl daemon-reload

[root@ceph02 ~]# systemctl enable --now docker.service

[root@ceph03 ~]# systemctl daemon-reload

[root@ceph03 ~]# systemctl enable --now docker.service

2. Deploy Ceph

· Download cephadm

https://download.ceph.com/rpm-19.2.3/el9/noarch/

[root@ceph01 ~]# rz -E

[root@ceph01 ~]# ls -l

...

-rwxr-xr-x 1 root root 787672 Aug 11 18:00 cephadm

· Make cephadm executable

[root@ceph01 ~]# chmod +x cephadm

· Configure /etc/hosts name resolution on the cluster hosts

[root@ceph01 ~]# vim /etc/hosts

[root@ceph02 ~]# vim /etc/hosts

[root@ceph03 ~]# vim /etc/hosts

.....

10.0.0.101 ceph01

10.0.0.102 ceph02

10.0.0.103 ceph03

· Copy cephadm into a directory on PATH

[root@ceph01 ~]# cp cephadm /usr/bin/

· Bootstrap the first mon node

[root@ceph01 ~]# cephadm bootstrap --mon-ip 10.0.0.101 --cluster-network 10.0.0.0/24 --allow-fqdn-hostname

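.....

URL: https://ceph01:8443/

User: admin

Password: abj1r8mja0

.....

# On first login, change the password to:

- ceph123456

3. Manage the cluster from a client

· Manage with client commands

# Add the Ceph repository

[root@ceph01 ~]# cephadm add-repo --release squid

# Switch to the Tsinghua mirror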

[root@ceph01 ~]# vim /etc/apt/sources.list.d/ceph.list

deb https://mirrors.tuna.tsinghua.edu.cn/debian-squid/ jammy main

# Install the client management commands

[root@ceph01 ~]# apt -y install ceph-common

# Check the cluster status

[root@ceph01 ~]# ceph -s

cluster:

id: c0c1e638-769b-11f0-90d7-8dc9d334b679

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 9m)

mgr: ceph01.izjspq(active, since 7m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

· Manage from inside the cephadm container

[root@ceph01 ~]# cephadm shell

......

root@ceph01:/# ceph -s

cluster:

id: c0c1e638-769b-11f0-90d7-8dc9d334b679

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 10m)

mgr: ceph01.izjspq(active, since 8m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

· List the cluster hosts

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

1 hosts in cluster

· Add hosts to the cluster

1. Set up passwordless SSH from the cluster to the new hosts

[root@ceph01 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph02

[root@ceph01 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph03

2. Add the hosts to the cluster

[root@ceph01 ~]# ceph orch host add ceph02 10.0.0.102

[root@ceph01 ~]# ceph orch host add ceph03 10.0.0.103

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

ceph02 10.0.0.102

ceph03 10.0.0.103

3 hosts in cluster

Check the dashboard page to confirm.
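The two steps above (copy the cluster's SSH key, then add the host) repeat for every node, so they can be generated by a loop. This is a dry-run sketch: it only prints the commands so they can be reviewed first. The `HOSTS` list and the function name `print_host_add_cmds` are hypothetical and should be adapted to your environment.

```shell
#!/bin/sh
# Dry-run sketch: print the commands needed to add each host to the cluster.
# HOSTS is a hypothetical "name=ip" list taken from the example addresses.
HOSTS="ceph02=10.0.0.102 ceph03=10.0.0.103"

print_host_add_cmds() {
    # Arguments: entries of the form name=ip
    for entry in "$@"; do
        name=${entry%%=*}   # text before the first '='
        ip=${entry#*=}      # text after the first '='
        echo "ssh-copy-id -f -i /etc/ceph/ceph.pub $name"
        echo "ceph orch host add $name $ip"
    done
}

# Word splitting of $HOSTS is intentional here: one argument per host entry.
print_host_add_cmds $HOSTS
```

Once the printed commands look right, they can be run by hand or piped to `sh -x` on the admin node.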

· Drain a cluster host

1. Drain the host

[root@ceph01 ~]# ceph orch host drain ceph03

Scheduled to remove the following daemons from host 'ceph03'

type id

-------------------- ---------------

ceph-exporter ceph03

crash ceph03

node-exporter ceph03

mon ceph03

# List the cluster hosts

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

ceph02 10.0.0.102

ceph03 10.0.0.103 _no_schedule,_no_conf_keyring

3 hosts in cluster

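# _no_schedule: no daemons will be scheduled on this host

# _no_conf_keyring: cephadm does not deploy config or keyring files to this host

2. Remove the drained host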

[root@ceph01 ~]# ceph orch host rm ceph03

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

ceph02 10.0.0.102

2 hosts in cluster

3. Delete leftover data (standard practice)

[root@ceph03 ~]# ll /var/lib/ceph/

total 12

drwxr-xr-x 3 root root 4096 Aug 12 15:26 ./

drwxr-xr-x 43 root root 4096 Aug 12 15:26 ../

drwx------ 4 167 167 4096 Aug 12 15:32 c0c1e638-769b-11f0-90d7-8dc9d334b679/

[root@ceph03 ~]# ll /var/log/ceph/

total 112

drwxr-xr-x 3 root root 4096 Aug 12 15:30 ./

drwxr-xr-x 11 root syslog 4096 Aug 12 15:26 ../

drwxrwx--- 2 167 167 4096 Aug 12 15:30 c0c1e638-769b-11f0-90d7-8dc9d334b679/

-rw-r--r-- 1 root root 97155 Aug 12 15:34 cephadm.log

4. Add the host back

[root@ceph01 ~]# ceph orch host add ceph03 10.0.0.103

Added host 'ceph03' with addr '10.0.0.103'

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

ceph02 10.0.0.102

ceph03 10.0.0.103

3 hosts in cluster

VII. OSD management

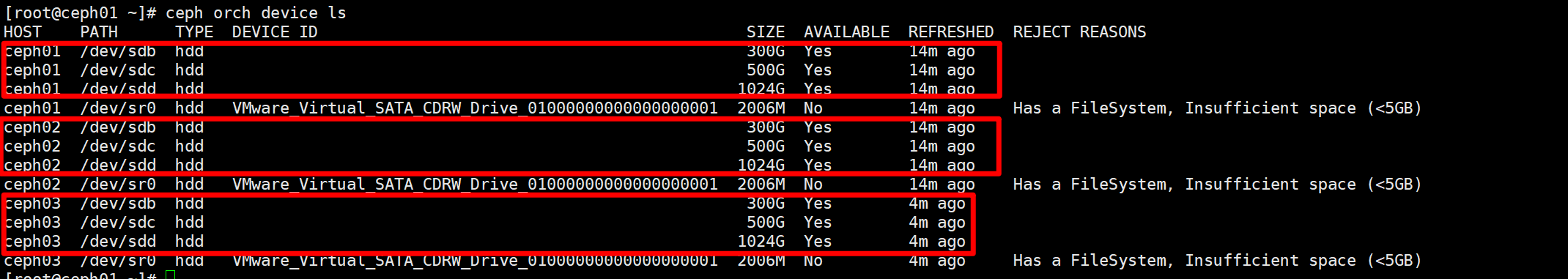

1. List the disks available to the cluster

[root@ceph01 ~]# ceph orch device ls
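Once `ceph orch device ls` reports the disks as available, the per-disk `ceph orch daemon add osd` calls used in this section follow one pattern: every host paired with every device. A minimal dry-run sketch, assuming all hosts expose the same device paths (the function name `print_osd_add_cmds` is hypothetical):

```shell
#!/bin/sh
# Dry-run sketch: print one "ceph orch daemon add osd" command per host/device pair.

print_osd_add_cmds() {
    # $1: space-separated host names; $2: space-separated device paths
    for host in $1; do
        for dev in $2; do
            echo "ceph orch daemon add osd $host:$dev"
        done
    done
}

# Hosts and devices from this walkthrough; adjust them to your own nodes.
print_osd_add_cmds "ceph01 ceph02 ceph03" "/dev/sdb /dev/sdc /dev/sdd"
```

Printing instead of executing keeps the sketch safe to run anywhere; review the nine lines before running them on the admin node.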

2. Add disks as OSDs

[root@ceph01 ~]# ceph orch daemon add osd ceph01:/dev/sdb

Created osd(s) 0 on host 'ceph01'

[root@ceph01 ~]# ceph orch daemon add osd ceph01:/dev/sdc

Created osd(s) 1 on host 'ceph01'

[root@ceph01 ~]# ceph orch daemon add osd ceph01:/dev/sdd

Created osd(s) 2 on host 'ceph01'

[root@ceph01 ~]# ceph orch daemon add osd ceph02:/dev/sdb

Created osd(s) 3 on host 'ceph02'

[root@ceph01 ~]# ceph orch daemon add osd ceph02:/dev/sdc

Created osd(s) 4 on host 'ceph02'

[root@ceph01 ~]# ceph orch daemon add osd ceph02:/dev/sdd

Created osd(s) 5 on host 'ceph02'

[root@ceph01 ~]# ceph orch daemon add osd ceph03:/dev/sdb

Created osd(s) 6 on host 'ceph03'

[root@ceph01 ~]# ceph orch daemon add osd ceph03:/dev/sdc

Created osd(s) 7 on host 'ceph03'

[root@ceph01 ~]# ceph orch daemon add osd ceph03:/dev/sdd

Created osd(s) 8 on host 'ceph03'

3. View the OSD list

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 5.34389 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

-7 1.78130 host ceph03

6 hdd 0.29300 osd.6 up 1.00000 1.00000

7 hdd 0.48830 osd.7 up 1.00000 1.00000

8 hdd 1.00000 osd.8 up 1.00000 1.00000

4. View OSD status

[root@ceph01 ~]# ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 ceph01 26.7M 299G 0 0 0 0 exists,up

1 ceph01 26.7M 499G 0 0 0 0 exists,up

2 ceph01 27.2M 1023G 0 0 0 0 exists,up

3 ceph02 26.7M 299G 0 0 0 0 exists,up

4 ceph02 26.7M 499G 0 0 0 0 exists,up

5 ceph02 27.2M 1023G 0 0 0 0 exists,up

6 ceph03 26.7M 299G 0 0 0 0 exists,up

7 ceph03 26.7M 499G 0 0 0 0 exists,up

8 ceph03 427M 1023G 0 0 0 0 exists,up

5. View OSD usage

[root@ceph01 ~]# ceph osd df

ID CLASS WEIGHT REWEIGHT SIZE RAW USE DATA OMAP META AVAIL %USE VAR PGS STATUS

0 hdd 0.29300 1.00000 300 GiB 27 MiB 588 KiB 1 KiB 26 MiB 300 GiB 0.01 0.76 0 up

1 hdd 0.48830 1.00000 500 GiB 27 MiB 588 KiB 1 KiB 26 MiB 500 GiB 0.01 0.46 0 up

2 hdd 1.00000 1.00000 1024 GiB 27 MiB 1.0 MiB 1 KiB 26 MiB 1024 GiB 0.00 0.23 1 up

3 hdd 0.29300 1.00000 300 GiB 27 MiB 588 KiB 1 KiB 26 MiB 300 GiB 0.01 0.76 0 up

4 hdd 0.48830 1.00000 500 GiB 27 MiB 588 KiB 1 KiB 26 MiB 500 GiB 0.01 0.46 0 up

5 hdd 1.00000 1.00000 1024 GiB 27 MiB 1.0 MiB 1 KiB 26 MiB 1024 GiB 0.00 0.23 1 up

6 hdd 0.29300 1.00000 300 GiB 27 MiB 588 KiB 1 KiB 26 MiB 300 GiB 0.01 0.76 0 up

7 hdd 0.48830 1.00000 500 GiB 27 MiB 588 KiB 1 KiB 26 MiB 500 GiB 0.01 0.46 0 up

8 hdd 1.00000 1.00000 1024 GiB 427 MiB 1.0 MiB 1 KiB 26 MiB 1024 GiB 0.04 3.55 1 up

TOTAL 5.3 TiB 642 MiB 6.5 MiB 14 KiB 236 MiB 5.3 TiB 0.01

MIN/MAX VAR: 0.23/3.55 STDDEV: 0.01

6. View OSD latency

[root@ceph01 ~]# ceph osd perf

osd commit_latency(ms) apply_latency(ms)

8 0 0

7 0 0

6 0 0

5 0 0

4 0 0

3 0 0

2 0 0

1 0 0

0 0 0

7. Remove an OSD node

This example uses ceph03 as the target.

· List the OSDs on the node to be removed

[root@ceph01 ~]# ceph osd crush ls ceph03

osd.6

osd.7

osd.8

· Migrate data off the OSDs to be removed

[root@ceph01 ~]# ceph osd out osd.6

marked out osd.6.

[root@ceph01 ~]# ceph osd out osd.7

marked out osd.7.

[root@ceph01 ~]# ceph osd out osd.8

marked out osd.8.

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 5.34389 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

-7 1.78130 host ceph03

6 hdd 0.29300 osd.6 up 0 1.00000

7 hdd 0.48830 osd.7 up 0 1.00000

8 hdd 1.00000 osd.8 up 0 1.00000

· Stop the OSD daemons

[root@ceph03 ~]# systemctl stop ceph-c0c1e638-769b-11f0-90d7-8dc9d334b679@osd.6.service

[root@ceph03 ~]# systemctl stop ceph-c0c1e638-769b-11f0-90d7-8dc9d334b679@osd.7.service

[root@ceph03 ~]# systemctl stop ceph-c0c1e638-769b-11f0-90d7-8dc9d334b679@osd.8.service

· Remove the CRUSH mapping

[root@ceph01 ~]# ceph osd crush remove osd.6

removed item id 6 name 'osd.6' from crush map

[root@ceph01 ~]# ceph osd crush remove osd.7

removed item id 7 name 'osd.7' from crush map

[root@ceph01 ~]# ceph osd crush remove osd.8

removed item id 8 name 'osd.8' from crush map

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 3.56259 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

-7 0 host ceph03

6 0 osd.6 down 0 1.00000

7 0 osd.7 down 0 1.00000

8 0 osd.8 down 0 1.00000

· Delete the OSD authentication keys

[root@ceph01 ~]# ceph auth del osd.6

[root@ceph01 ~]# ceph auth del osd.7

[root@ceph01 ~]# ceph auth del osd.8

· Remove the OSDs from the OSD list

[root@ceph01 ~]# ceph osd rm osd.6

removed osd.6

[root@ceph01 ~]# ceph osd rm osd.7

removed osd.7

[root@ceph01 ~]# ceph osd rm osd.8

removed osd.8

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 3.56259 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

-7 0 host ceph03

· Clean up the removed node

1. Remove the host bucket (the node's entry in the cluster's CRUSH map)

[root@ceph01 ~]# ceph osd crush remove ceph03

removed item id -7 name 'ceph03' from crush map

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 3.56259 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

2. Remove the node from the host list

[root@ceph01 ~]# ceph orch host rm ceph03 --force

Removed host 'ceph03'

[root@ceph01 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph01 10.0.0.101 _admin

ceph02 10.0.0.102

2 hosts in cluster

3. Remove the LVM volumes on the removed node

vgremove -f $( pvs --noheadings -o vg_name /dev/sdb) 2>/dev/null

pvremove /dev/sdb -ff

vgremove -f $( pvs --noheadings -o vg_name /dev/sdc) 2>/dev/null

pvremove /dev/sdc -ff

vgremove -f $( pvs --noheadings -o vg_name /dev/sdd) 2>/dev/null

pvremove /dev/sdd -ff

Remember to add the node back afterwards.
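The cluster-side part of the removal in step 7 follows a fixed order: mark the OSDs out, stop their daemons, remove them from the CRUSH map, delete their keys, then remove them from the OSD list. The sketch below prints those commands phase by phase for a list of OSD ids, as a dry run. The function name `print_osd_remove_cmds` is hypothetical, and the fsid is the one from this walkthrough.

```shell
#!/bin/sh
# Dry-run sketch: print the OSD removal commands in the same order as step 7.

print_osd_remove_cmds() {
    # $1: cluster fsid; remaining arguments: numeric OSD ids
    fsid=$1
    shift
    for id in "$@"; do echo "ceph osd out osd.$id"; done
    # The stop commands must run on the host that owns the OSDs,
    # and only after the data has finished migrating away.
    for id in "$@"; do echo "systemctl stop ceph-${fsid}@osd.${id}.service"; done
    for id in "$@"; do echo "ceph osd crush remove osd.$id"; done
    for id in "$@"; do echo "ceph auth del osd.$id"; done
    for id in "$@"; do echo "ceph osd rm osd.$id"; done
}

print_osd_remove_cmds c0c1e638-769b-11f0-90d7-8dc9d334b679 6 7 8
```

The output is meant to be read and run step by step, not piped straight into a shell, because the stop commands belong on a different host than the `ceph` commands.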

8. Add a new disk as an OSD

Attach a 2 TB disk.

[root@ceph01 ~]# ceph orch device ls

..... # the new disk can take a while to appear

ceph03 /dev/sde hdd

Add the disk as an OSD:

[root@ceph01 ~]# ceph orch daemon add osd ceph03:/dev/sde

Created osd(s) 9 on host 'ceph03'

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 7.34389 root default

-3 1.78130 host ceph01

0 hdd 0.29300 osd.0 up 1.00000 1.00000

1 hdd 0.48830 osd.1 up 1.00000 1.00000

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-5 1.78130 host ceph02

3 hdd 0.29300 osd.3 up 1.00000 1.00000

4 hdd 0.48830 osd.4 up 1.00000 1.00000

5 hdd 1.00000 osd.5 up 1.00000 1.00000

-7 3.78130 host ceph03

6 hdd 0.29300 osd.6 up 1.00000 1.00000

7 hdd 0.48830 osd.7 up 1.00000 1.00000

8 hdd 1.00000 osd.8 up 1.00000 1.00000

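9 hdd 2.00000 osd.9 up 1.00000 1.00000

VIII. Storage pools

1. Pool types

Replicated pool: keeps full copies of each object (3 by default)

Erasure-coded pool: stores data chunks plus parity chunks, similar in idea to RAID 5

2. Pool management

· Create pools

# Create a replicated pool named 98_pool

[root@ceph01 ~]# ceph osd pool create 98_pool replicated

pool '98_pool' created

# Create an erasure-coded pool

[root@ceph01 ~]# ceph osd pool create 98_pool02 erasure

pool '98_pool02' created

# Create a pool with a specific replica count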

[root@ceph01 ~]# ceph osd pool create 98_pool03 --size 5

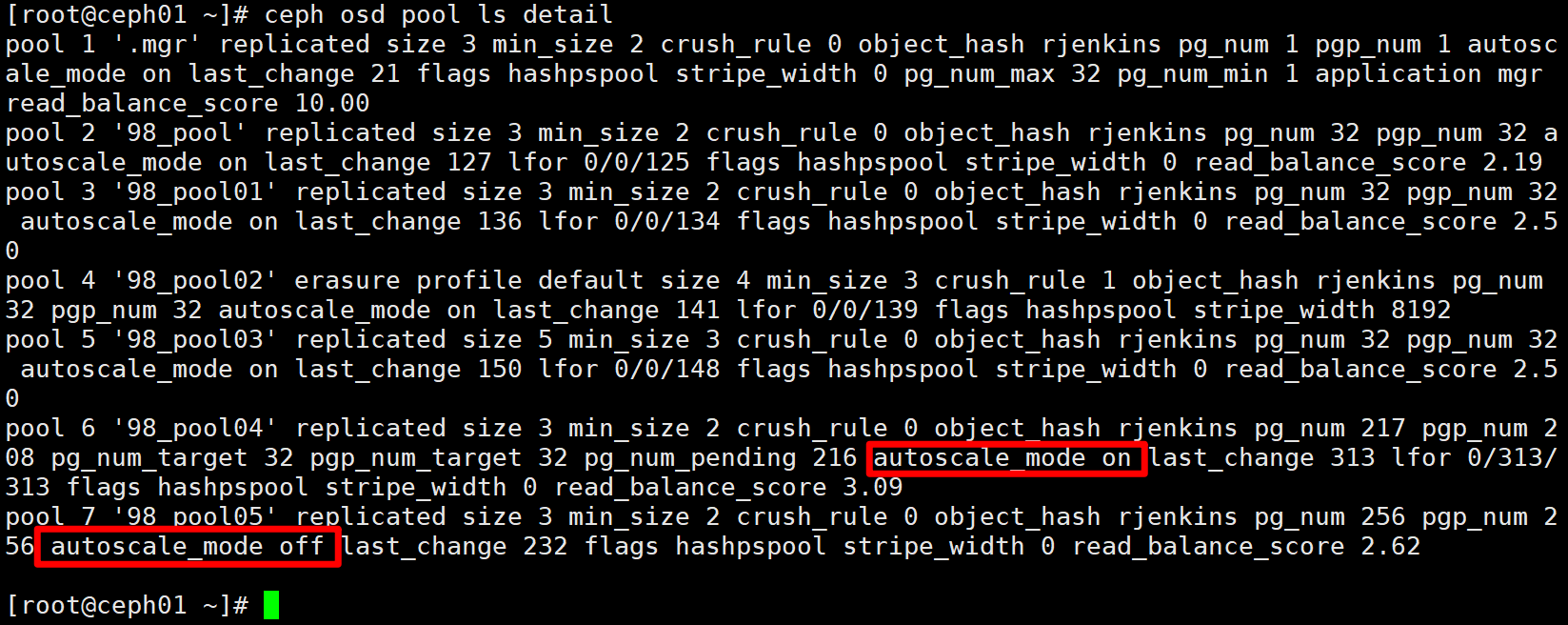

pool '98_pool03' created

· View pool information

[root@ceph01 ~]# ceph osd pool ls

.mgr

98_pool

98_pool01

98_pool02

98_pool03

[root@ceph01 ~]# ceph osd pool ls detail

...

pool 2 '98_pool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 127 lfor 0/0/125 flags hashpspool stripe_width 0 read_balance_score 2.19

pool 3 '98_pool01' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 136 lfor 0/0/134 flags hashpspool stripe_width 0 read_balance_score 2.50

pool 4 '98_pool02' erasure profile default size 4 min_size 3 crush_rule 1 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 141 lfor 0/0/139 flags hashpspool stripe_width 8192

pool 5 '98_pool03' replicated size 5 min_size 3 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 150 lfor 0/0/148 flags hashpspool stripe_width 0 read_balance_score 2.50

# Show a pool's replica count

[root@ceph01 ~]# ceph osd pool get 98_pool05 size

size: 3

# Show the PG count

[root@ceph01 ~]# ceph osd pool get 98_pool05 pg_num

pg_num: 256

# Show pool I/O activity

[root@ceph01 ~]# ceph osd pool stats

pool .mgr id 1

nothing is going on

pool 98_pool id 2

nothing is going on

pool 98_pool01 id 3

nothing is going on

pool 98_pool02 id 4

nothing is going on

pool 98_pool03 id 5

nothing is going on

pool 98_pool04 id 6

nothing is going on

pool 98_pool05 id 7

nothing is going on
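The `ceph osd pool ls detail` lines shown earlier are long, and usually only the pool name, type, size, and pg_num matter. The sketch below condenses that text with `awk`. It assumes the line layout shown in this section (`pool <id> '<name>' <type> ... size N ... pg_num N ...`); the function name `summarize_pools` is hypothetical.

```shell
#!/bin/sh
# Sketch: condense "ceph osd pool ls detail" text into one short line per pool.

summarize_pools() {
    # Reads the detail text on stdin; prints "name type size=N pg_num=N".
    awk -v q="'" '$1 == "pool" {
        name = $3
        gsub(q, "", name)               # strip the quotes around the pool name
        size = ""; pgs = ""
        for (i = 4; i < NF; i++) {      # the remaining fields are "key value" pairs
            if ($i == "size")   size = $(i + 1)
            if ($i == "pg_num") pgs  = $(i + 1)
        }
        print name, $4, "size=" size, "pg_num=" pgs
    }'
}

# Sample lines shortened from the output above; on a live cluster use:
#   ceph osd pool ls detail | summarize_pools
summarize_pools <<'EOF'
pool 2 '98_pool' replicated size 3 min_size 2 crush_rule 0 pg_num 32 pgp_num 32
pool 4 '98_pool02' erasure profile default size 4 min_size 3 pg_num 32 pgp_num 32
EOF
```

Matching on the exact words `size` and `pg_num` keeps `min_size` and `pgp_num` from being picked up by mistake.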

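· Pool PG and PGP counts

Rule of thumb for 10 disks with 3 replicas: 10 * 100 / 3 ≈ 333, rounded to a nearby power of two (256 is used below)

# --autoscale_mode=off turns off PG autoscaling.

# Without it, the autoscaler moves pg_num back to its own target (32 in this cluster).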

[root@ceph01 ~]# ceph osd pool create 98_pool05 256 256 --autoscale_mode=off
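The PG rule of thumb above (OSD count times 100, divided by the replica count, rounded to a power of two) can be written as a small function. This is a sketch of that arithmetic only. The function name `suggest_pg_num` is hypothetical, rounding to the nearest power of two is one common reading of the rule, and the result is best treated as a budget shared across the cluster's pools rather than a per-pool value.

```shell
#!/bin/sh
# Sketch of the rule of thumb: (OSDs * 100 / replicas), rounded to the nearest power of two.

suggest_pg_num() {
    # $1: number of OSDs; $2: replica count (pool size)
    target=$(( $1 * 100 / $2 ))
    pow=1
    # Find the largest power of two that does not exceed the target.
    while [ $(( pow * 2 )) -le "$target" ]; do
        pow=$(( pow * 2 ))
    done
    # Move up to the next power of two if it is closer to the target.
    if [ $(( target - pow )) -gt $(( pow * 2 - target )) ]; then
        pow=$(( pow * 2 ))
    fi
    echo "$pow"
}

suggest_pg_num 10 3   # prints 256
```

For 10 OSDs and 3 replicas the target is 333, and 256 is closer to it than 512, which matches the value passed to `ceph osd pool create` above.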

· Change pool settings

# Change the pool's replica count

[root@ceph01 ~]# ceph osd pool set 98_pool05 size 5

set pool 7 size to 5

[root@ceph01 ~]# ceph osd pool get 98_pool05 size

size: 5

# Rename the pool

[root@ceph01 ~]# ceph osd pool rename 98_pool 98_pool00

pool '98_pool' renamed to '98_pool00'

# Change the PG count

[root@ceph01 ~]# ceph osd pool get 98_pool00 pg_num

pg_num: 32

[root@ceph01 ~]# ceph osd pool set 98_pool00 pg_num 128

set pool 2 pg_num to 128

[root@ceph01 ~]# ceph osd pool get 98_pool00 pg_num

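pg_num: 128

· Delete a pool (for reference)

Ceph has two safeguards against accidental pool deletion:

nodelete must be false on the pool before the pool can be deleted

mon_allow_pool_delete must be true on the monitors before any pool can be deleted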

[root@ceph01 ~]# ceph osd pool get 98_pool00 nodelete

nodelete: false

# Try to delete the pool; the first attempts fail

[root@ceph01 ~]# ceph osd pool delete 98_pool00

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool 98_pool00. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

[root@ceph01 ~]# ceph osd pool delete 98_pool00 --yes-i-really-really-mean-it

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool 98_pool00. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

[root@ceph01 ~]# ceph osd pool delete 98_pool00 98_pool00 --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

# Tell the monitors to allow pool deletion

[root@ceph01 ~]# ceph tell 'mon.*' injectargs --mon_allow_pool_delete=true

mon.ceph01: {}

mon.ceph01: mon_allow_pool_delete = ''

mon.ceph02: {}

mon.ceph02: mon_allow_pool_delete = ''

mon.ceph03: {}

mon.ceph03: mon_allow_pool_delete = ''

# With both conditions met, try the deletion again

[root@ceph01 ~]# ceph osd pool delete 98_pool00 98_pool00 --yes-i-really-really-mean-it

pool '98_pool00' removed

[root@ceph01 ~]# ceph osd pool ls

.mgr

98_pool01

98_pool02

98_pool03

98_pool04

98_pool05

In production, set nodelete to true:

[root@ceph01 ~]# ceph osd pool set 98_pool01 nodelete true

set pool 3 nodelete to true
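The two safeguards described in this section combine into a simple condition: a pool can be deleted only when its `nodelete` flag is false and `mon_allow_pool_delete` is true. The sketch below expresses that check on plain values, so it can be tried without a cluster. Both function names are hypothetical, and feeding in live values (for example from `ceph osd pool get <pool> nodelete`) is left to the reader.

```shell
#!/bin/sh
# Sketch: decide whether "ceph osd pool delete" would pass both safeguards.

value_of() {
    # Turns a line such as "nodelete: false" into "false" (the text after ": ").
    echo "${1#*: }"
}

can_delete_pool() {
    # $1: the pool's nodelete value; $2: the monitors' mon_allow_pool_delete value
    [ "$1" = "false" ] && [ "$2" = "true" ]
}

# Example with hand-typed values instead of live cluster output.
nodelete=$(value_of "nodelete: false")
if can_delete_pool "$nodelete" "true"; then
    echo "deletion allowed"
else
    echo "deletion blocked"
fi
```

With `nodelete` set to true, as recommended for production, the same check reports that deletion is blocked regardless of the monitor setting.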